The Ultimate On-Site SEO Guide for Your Online Store

eCommerce Search Engine Optimization – The Ultimate On-Site SEO Guide…

As bloggers and store owners, we want to make our websites appeal to both search engines and users.

A search engine ‘looks’ at a website quite differently to us humans. Your website design, fancy graphics and well-written blog posts count for nothing if you have missed the critical on-site SEO covered in this post.

SEO is not dead.

Modern SEO is not about fooling the search engines – it is about working with the search engines to present your products and content in the best possible way.

In this post, SEO expert Itamar Gero shows us exactly how…

The Ultimate On-Site SEO Guide for store owners and bloggers

Ecommerce is a huge business. According to the United States Census Bureau, ecommerce sales in the US alone hit $394.9 billion in 2016. The rising trend in eCommerce sales and its influence on offline retail is expected to continue well into the 2020s worldwide.

Understandably, online store owners are jockeying for position to take ever-bigger pieces of the eCommerce pie. To that end, SEO has always been a top priority for most eCommerce marketing strategies. Search engines drive the most qualified and motivated traffic that’s easier to convert into paying customers than visitors from other channels. It’s why websites are waging a constant “war” behind the scenes to outrank each other for keywords related to their products and services.

And while having lots of competitors is daunting, you can take comfort in the fact that a lot of eCommerce sites aren’t doing their SEO right. With a solid grasp of SEO best practices and lots of hard work, you can gain high search visibility without breaking the bank.

SEO Guide To Your Online Store – Remove The Headache!

Special Note about this SEO Guide:

This SEO guide and tutorial is one of the most in depth we have ever produced. [Over 7000 words and 20+ images]

It is easy to be overwhelmed and to feel muddled with SEO, but in reality it is much more straightforward than it may initially appear.

The rewards for getting Search Engine Optimization right are HUGE!

In addition, while this guide is particularly focused on eCommerce SEO, much of what we detail here is equally applicable to your blog or company website.

Two SEO resources you will need:

What is Ecommerce SEO?

Simply put, ecommerce SEO is the process of optimizing an online store for greater visibility on search engines. It has four main facets:

- Keyword research

- On-site SEO

- Link Building

- Usage Signal Optimization

In this post, I’ll tackle the most foundational and arguably the most crucial of the four areas: on-site SEO. In our experience working with thousands of agencies, the optimizations we made within the ecommerce sites we handle have had the greatest impact on overall organic traffic growth.

While link building and other off-page SEO activities are important, on-site SEO sets the tone for success each and every time.

The Resurgence of On-Site SEO

On-site SEO is the collective term used to describe all SEO activities done within the website. It can be divided into two segments: technical SEO and on-page SEO. Technical SEO mostly deals with making sure that the site stays up, running and available for search bot crawls. On-page SEO, on the other hand, is geared more towards helping search engines understand the contextual relevance of your site to the keywords you’re targeting.

I’m focusing on on-site SEO today because of the undeniable resurgence it’s been having during the past 3 years or so. You see, from the late 2000s to around 2011, the SEO community went through a phase when everyone was obsessed with acquiring inbound links. Back then, links were far and away the most powerful determinant of Google rankings. Ecommerce sites locked in brutal ranking battles were at the core of this movement, and competition eventually came down to who could get the most links, ethically or otherwise.

Eventually, Google introduced the Panda and Penguin updates, which punished a lot of sites that proliferated link and content spam. Quality links that boosted rankings became harder to come by, which made on-site signals more influential on rankings. The SEO community soon started seeing successful ecommerce SEO campaigns that focused more on technical and on-page optimization than on heavy link acquisition.

This post will show you what you can do on your site to take advantage of the on-site SEO renaissance:

Technical SEO

As mentioned earlier, technical SEO is mostly about making sure your site has good uptime, loads fast, offers secure browsing to users and facilitates good bot and user navigation through its pages. Here’s a list of things you need to monitor constantly to ensure a high level of technical health:

The Robots.txt File

The robots.txt file is a very small document that search bots access when they visit your site. It tells them which bots are welcome, which pages they can access and which pages are off-limits. When robots.txt disallows access to a certain page or directory path within a website, well-behaved bots will follow the instruction and not crawl that page at all. As a result, disallowed pages are generally kept out of search results, although Google may still list a blocked URL without a description if other pages link to it. Any link equity flowing to a disallowed page is effectively lost, and the page cannot pass link equity on either.

When checking your robots.txt file, make sure that no page meant for public viewing falls under a disallow rule. Similarly, you’ll want to make sure that pages which don’t serve the intent of your target audience are blocked.

Having more pages indexed by search engines may sound like a good thing, but it really isn’t. Google and other search engines constantly try to improve the quality of the listings displayed in their search engine results pages (SERPs).

Therefore, they expect webmasters to be judicious in the pages they submit for indexing and they reward those who comply.

In general, search engine queries fall under one of three classifications:

- Navigational

- Transactional

- Informational

If your pages don’t satisfy the intents behind any of these, consider using robots.txt to prevent bot access to them. Doing so will make better use of your crawl budget and make your site’s internal link equity flow to more important pages. In an ecommerce site, the types of URLs that you usually want to bar access to are:

- Checkout pages – These are the series of pages that shoppers use to choose and confirm their purchases. These pages are unique to each shopper’s session and are therefore of no interest to anyone else on the Internet.

- Dynamic pages – These pages are created through unique user requests such as internal searches and page filtering combinations. Like checkout pages, these pages are generated for one specific user who made the request. Therefore, they’re of no interest to most people on the Web, making the impetus for search engines to index them very weak. Further, these pages eventually expire and send out 404 Not Found responses when re-crawled by search engines. That can be taken as a signal of poor site health that can negatively impact an online store’s search visibility.

Dynamic pages can easily be identified by the presence of the characters “?” and “=” in their URLs. You can keep bots from crawling them by adding a rule like this to the robots.txt file (Google and Bing both support the * wildcard): Disallow: /*?

- Staging Pages – These are pages that are currently under development and unfit for public viewing. Set up a dedicated path in your site’s directory for staging pages, and make sure the robots.txt file blocks that directory.

- Backend pages – These pages are for site administrators only. Naturally, you’ll want the public not to visit these pages, much less find them in search results. Everything from your admin login page down to the internal site control pages should be placed under a robots.txt restriction. Keep in mind that robots.txt only asks crawlers to stay away: the file is publicly readable and does not prevent unauthorized entry, so these pages still need proper login protection.
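
The four URL types above translate into a handful of Disallow rules. Python's built-in `urllib.robotparser` only does plain prefix matching and doesn't understand the `*` wildcard, so the sketch below uses a small hand-written matcher that follows Google's documented matching rules: `*` matches any run of characters, a trailing `$` anchors the end of the URL, and when several rules match, the longest one wins (with Allow winning a tie). The directory names `/checkout/`, `/staging/` and `/admin/` are placeholders; substitute your platform's actual paths.

```python
import re

# Hypothetical robots.txt for an online store; the paths are placeholders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /*?
Disallow: /staging/
Disallow: /admin/
"""

def parse_rules(robots_txt: str) -> list[tuple[str, str]]:
    """Collect (directive, pattern) pairs. Simplification: assumes one
    'User-agent: *' group, so User-agent lines are simply skipped."""
    rules = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        if field.lower() in ("allow", "disallow") and value:
            rules.append((field.lower(), value))
    return rules

def pattern_to_regex(pattern: str) -> re.Pattern:
    """'*' matches any run of characters; a trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))

def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """The longest matching pattern wins; on a tie, Allow wins."""
    best_length, allowed = -1, True  # no matching rule means crawlable
    for directive, pattern in rules:
        if pattern_to_regex(pattern).match(path):  # match() anchors at the start
            if len(pattern) > best_length or (
                len(pattern) == best_length and directive == "allow"
            ):
                best_length, allowed = len(pattern), directive == "allow"
    return allowed

rules = parse_rules(ROBOTS_TXT)
for path in ["/pants", "/checkout/step-1", "/search?q=jeans", "/admin/login"]:
    print(path, "allowed" if is_allowed(path, rules) else "blocked")
```

Running it prints `/pants allowed` followed by `blocked` for the checkout, search and admin paths, which is a quick way to confirm that a new rule doesn't accidentally catch product or category URLs.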

Note that the robots.txt file isn’t the only way to restrict the indexing of pages. The noindex meta directive tag, among others, can also be used for this purpose. Depending on the nature of the deindexing situation, one may be more appropriate than the other.

The XML Sitemap

The XML sitemap is another document that search bots read to get useful information. This file lists all the pages that you want Google and other spiders to crawl. A good sitemap contains information that gives bots an idea of your information architecture, the frequency at which each page is modified and the whereabouts of assets, such as images, within your domain’s file paths.

While XML sitemaps aren’t strictly required for every website, they’re very important to online stores because of the number of pages a typical ecommerce site has. With a sitemap in place and submitted to tools like Google Search Console, search engines are more likely to find and index pages that sit deep within your site’s hierarchy of URLs.
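
Sitemaps follow the open protocol published at sitemaps.org: a `<urlset>` root element containing one `<url>` entry per page, each with a required `<loc>` and optional `<lastmod>`, `<changefreq>` and `<priority>` tags. Most platforms generate this file for you, but as a minimal sketch of the format, the hypothetical `build_sitemap` helper below writes one from a list of (URL, last-modified date) pairs using Python's standard `xml.etree.ElementTree`. The URLs are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Hypothetical helper: pages are (absolute URL, YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # the page address
        ET.SubElement(url, "lastmod").text = lastmod  # when it last changed
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

print(build_sitemap([
    ("https://www.example.com/", "2017-01-15"),
    ("https://www.example.com/pants", "2017-01-10"),
]))
```

ElementTree also escapes characters such as `&` in URLs, which the sitemap format requires.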

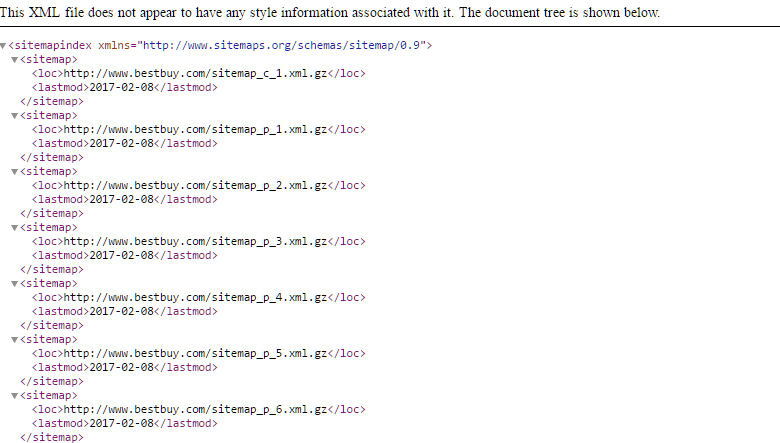

Your web developer should be able to set up an XML sitemap for your ecommerce site. More often than not, ecommerce sites already have it by the time their development cycles are finished. You can check this by going to [www.yoursite.com/sitemap.xml]. If you see something like this, you already have your XML sitemap up and running:

Having an XML Sitemap isn’t a guarantee that all the URLs listed on it will be considered for indexing.

Submitting the sitemap to Google Search Console ensures that the search giant’s bot finds and reads the sitemap. To do this, simply log in to your Search Console account and find the property you want to manage. Go to Crawl>Sitemaps and click the “Add/Test New Sitemap” button on the upper right. Just enter the URL slug of your XML sitemap and click “Submit.”

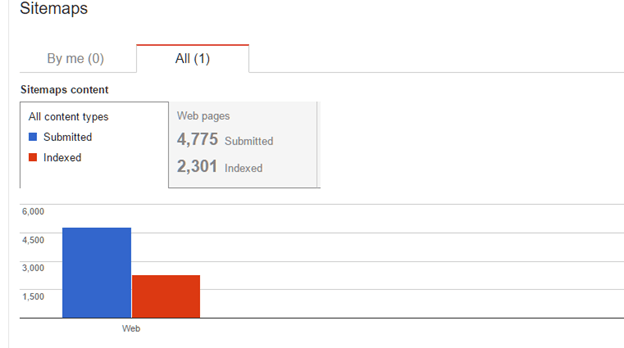

You should be able to see data on your sitemap in 2-4 days. This is what it will look like:

Notice that the report tells you how many pages are submitted (listed) in the sitemap and how many Google indexed. In a lot of cases, ecommerce sites will not get every page they submit in the sitemap indexed by Google. A page may not be indexed due to one of several reasons including:

- The URL is Dead – If a page has been deliberately deleted or is afflicted with technical problems, it will likely return a 4xx or 5xx error. Even if the URL is listed in the sitemap, Google will not index a page that isn’t working properly. Similarly, if a once-live page that’s listed in the sitemap goes down for long periods of time, it may be taken off the Google index.

- The URL is Redirected – When a URL redirects with either a 301 or a 302 response code, there’s no point in listing it in the sitemap. The redirect’s target page should be listed instead, if it isn’t there already. If a redirecting URL is listed in a sitemap, there’s a good chance Google will simply ignore it and report it as not indexed.

- The URL is being Blocked – As discussed under the robots.txt section, not all pages in an ecommerce site need to be indexed. If a webpage is being blocked by robots.txt or the noindex meta tag, there’s no sense listing it on the XML sitemap. Search Console will count it as not being indexed precisely because you asked it not to be.

Checkout pages, blog tag pages and other product pages with duplicate content are examples of pages that need not be listed in the XML sitemap.

- The URL has a Canonical Link – The rel=canonical HTML tag is often used in online stores to tell search engines which page they want indexed out of several very similar pages. This often happens when a product has multiple SKUs with very small distinguishing attributes. Instead of having Google choose which one to display on the SERPs, webmasters gained the ability to tell search engines which page is the “real” one that they want featured.

If your ecommerce site has product pages whose rel=canonical element points to a different URL, there’s no need to list them in your sitemap. Google will likely ignore them anyway and honor the page they point to.

- The Page has Thin Content – Google defines thin content as pages with little to no added value. Examples include pages with little to no textual content or pages that do have text but are duplicating other pages from within the site or from elsewhere in the web. When Google deems a page as being thin, it either disfavors it in the search results or ignores it outright.

If you have product pages that carry boilerplate content lifted from manufacturer sites or from other pages on your site, it’s usually smart to block indexing on them until you have the time and manpower to write richer, more unique descriptions. It also follows that you should leave these pages off your XML sitemap, since they’re less likely to be indexed.

- There is a Page-Level Penalty – In rare instances, search engines might take manual or algorithmic actions against sites that violate their quality guidelines. If a page is spammy, or has been hacked and infused with malware, it may be taken off the index. Naturally, you’ll want pages like these off your sitemap.

- The URL is Redundant – As you may expect, a URL listed twice in the XML sitemap will not be indexed twice. The second entry will likely be ignored and left off the index. You can solve this issue by opening your sitemap in your browser and saving it as an XML document that you can open in Excel. From there, go to the Data tab, highlight the column that contains your sitemap URLs and click Remove Duplicates.

- Restricted Pages – Pages that are password-protected or are only granting access to specific IPs will not be crawled by search engines and therefore not indexed.

The fewer inappropriate pages you list in your sitemap, the better your submission to indexing ratio will be. This helps search engines understand which pages within your domain hold the highest degrees of importance, allowing them to perform better for keywords they represent.
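
As an alternative to the Excel route described under “The URL is Redundant,” a few lines of Python can list every URL that appears more than once in a sitemap. This is a minimal sketch: it assumes a standard `<urlset>` sitemap (not a sitemap index file) that you have already downloaded into a string, and `duplicate_sitemap_urls` is a hypothetical name.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Namespace prefix used when searching a standard sitemap <urlset>.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def duplicate_sitemap_urls(sitemap_xml: str) -> list[str]:
    """Hypothetical helper: return every <loc> listed more than once."""
    root = ET.fromstring(sitemap_xml)
    locs = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]
    counts = Counter(locs)
    return sorted(url for url, count in counts.items() if count > 1)

sample = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://www.example.com/jeans</loc></url>"
    "<url><loc>https://www.example.com/shirts</loc></url>"
    "<url><loc>https://www.example.com/jeans</loc></url>"
    "</urlset>"
)
print(duplicate_sitemap_urls(sample))  # ['https://www.example.com/jeans']
```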

Crawl Errors

In online stores, products are routinely added and deleted depending on a lot of factors. When indexed product or category pages are deleted, it doesn’t necessarily mean that search engines automatically forget about them. Bots will continue to attempt crawls of these URLs for a few months until they’re fixed or taken off the index due to chronic unavailability.

In technical terms, crawl errors are pages that bots can’t successfully access because they return HTTP error codes. Among these codes, 404 is the most common, but other 4xx codes and 5xx server errors count as well. While search engines recognize that crawl errors are a normal occurrence in any website, having too many of them can stunt search visibility. Crawl errors act like loose ends on a site and can disrupt the proper flow of internal link equity between pages.

Crawl errors are usually caused by the following occurrences:

- Deliberately deleted pages

- Accidentally deleted pages

- Expired dynamic pages

- Server issues

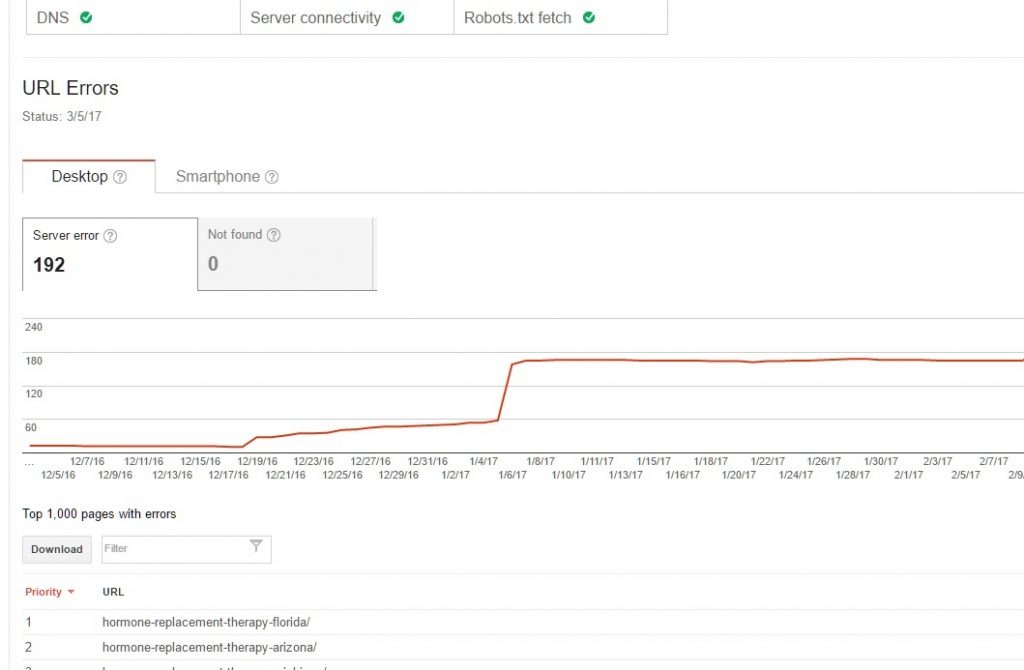

To see how many crawl errors you have in your ecommerce site and which URLs are affected, you can access Google Search Console and go to the property concerned. At the left sidebar menu, go to Crawl>Crawl Errors. You should see a report similar to this:

Depending on the types of pages you find in your crawl error report, there are several distinct ways to tackle them, including:

- Fix Accidentally Deleted Pages – If the URL belongs to a page that was deleted unintentionally, simply re-publishing the page under the same web address will fix the issue.

- Block Dynamic Page Indexing – As recommended earlier, dynamic pages that expire and become crawl errors can be prevented by blocking bot access using robots.txt. If no dynamic page is indexed in the first place, no crawl error will be detected by search engines.

- 301 Redirect the Old Page to the New Page – If a page was deleted deliberately and a new one was published to replace it, use a 301 redirect to lead search bots and human users to the page that took its place. This not only prevents the occurrence of a crawl error, it also passes along any link equity that the deleted page once held. However, don’t assume that the fix for every crawl error is a 301 redirect. Having too many redirects can affect site speed negatively.

- Address Server Issues – If server issues are the root cause of downtime, working with your web developer and your hosting service provider is your best recourse.

- Ignore Them – When pages are deleted deliberately but they’re of no great importance and no replacement page is planned, you can simply allow search engines to flush them out of the index in a few months.
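
One way to keep 301 redirects from piling up is to watch for chains. When a product is replaced more than once, old redirects can end up pointing at URLs that now redirect themselves (A to B to C), and every extra hop costs the browser or bot another request. The sketch below assumes your redirects are kept as a simple old-path to new-path mapping (a hypothetical format; servers and CMS platforms each store them their own way) and rewrites it so every old URL points straight at its final destination.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old URL straight at its final target (A -> C instead of
    A -> B -> C). Raises ValueError if the redirects loop back on themselves."""
    flat = {}
    for source, target in redirects.items():
        seen = {source}
        while target in redirects:  # the target itself redirects: keep following
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat

# Hypothetical redirect map: a product page replaced twice.
redirects = {"/old-jeans": "/jeans-2016", "/jeans-2016": "/jeans"}
print(flatten_redirects(redirects))
# {'/old-jeans': '/jeans', '/jeans-2016': '/jeans'}
```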

Having as few crawl errors as possible is a hallmark of responsible online store administration. A monthly check on your crawl error report should allow you to stay on top of things.

SEO Guide To Broken Links

Broken links prevent the movement of search spiders from page to page. They’re also bad for user experience because they lead visitors to dead ends on a site. Due to the volume of pages and the complex information architectures of most ecommerce sites, it’s common for broken links to occur here and there.

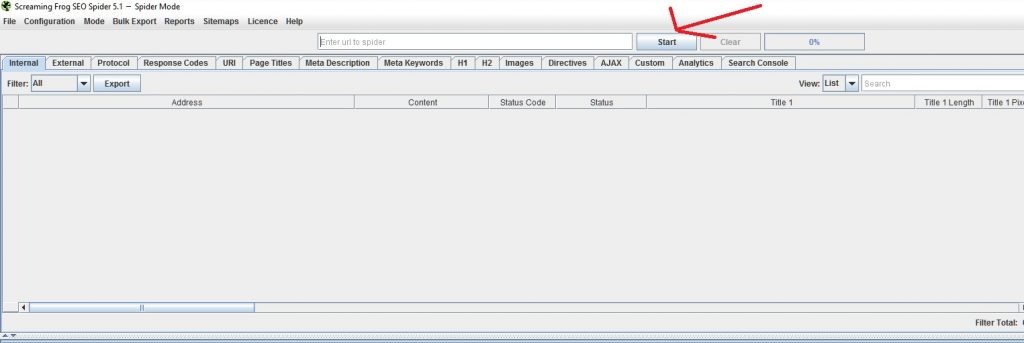

Broken links are usually caused either by errors in the target URLs of links or by linking to pages that return 404 Not Found response codes. To check your site for broken links, you can use a tool called the Screaming Frog SEO Spider. It has a free, limited version and a full version that costs £99 per year. For smaller online stores, the free version (which crawls up to 500 URLs) should suffice. For sites with thousands of pages, you’ll need the paid version for a thorough scan.

To check for broken links using Screaming Frog, simply set the app to function on the default Spider mode. Enter the URL of your home page and click the Start button.

Wait for the crawl to finish. Depending on the number of pages and your connection speed, the crawl could take several minutes to an hour.

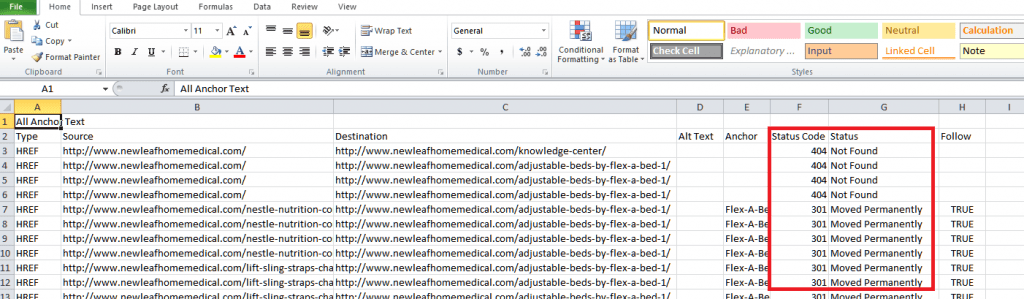

When the crawl finishes, go to Bulk Export and click on “All Anchor Text.” You will then have to save the data as a CSV file and open it in Excel. It should look like this:

Go to Column F (Status Code) and sort the values from largest to smallest. You should be able to find the broken links on top. In this case, this site only has 4 broken links.

Column B (Source) is the page where the link can be found and edited. Column C (Destination) is the URL the link points to. Column E (Anchor) is the link’s anchor text on the source page.
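
If the spreadsheet is large, the same filtering can be scripted. This minimal sketch assumes the export's header row uses the column names described above (“Source,” “Destination,” “Anchor” and “Status Code”); header names may differ between Screaming Frog versions, so check your file and adjust them if needed. The function name `broken_links` is hypothetical.

```python
import csv
import io

def broken_links(csv_text: str) -> list[tuple[str, str, str, int]]:
    """Hypothetical helper: return (source, destination, anchor, status) for
    every row with a 4xx or 5xx status code, highest codes first.
    Assumes the header names described in the text; adjust to match your file."""
    found = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            status = int(row["Status Code"])
        except (TypeError, ValueError):
            continue  # blank or non-numeric status: not a broken link
        if status >= 400:
            found.append((row["Source"], row["Destination"], row["Anchor"], status))
    return sorted(found, key=lambda link: link[3], reverse=True)

# Usage (the file name is hypothetical):
# with open("all_anchor_text.csv", newline="", encoding="utf-8") as f:
#     for link in broken_links(f.read()):
#         print(link)
```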

You can fix broken links through one of the following methods:

- Fix the Destination URL – If the destination URL was misspelled, correct the typo and set the link live.

- Remove the Link – If there was no clerical error on the destination URL but the page it used to link to no longer exists, simply remove the hyperlink.

- Replace the Link – If the link points to a page that has been deleted but there’s a replacement page or another page that can sub for it, replace the destination URL in your CMS.

Fixing broken links improves the circulation of link equity and gives search engines a better impression of your site’s technical health.

Duplicate Content and SEO for Your Online Store

As mentioned earlier, online stores are especially prone to content duplication issues because of the number of products they carry and the similarities in their SKU names. When Google detects enough similarity between pages, it decides for itself which pages to show in its search results. Unfortunately, the pages Google chooses usually aren’t the ones you would have chosen.

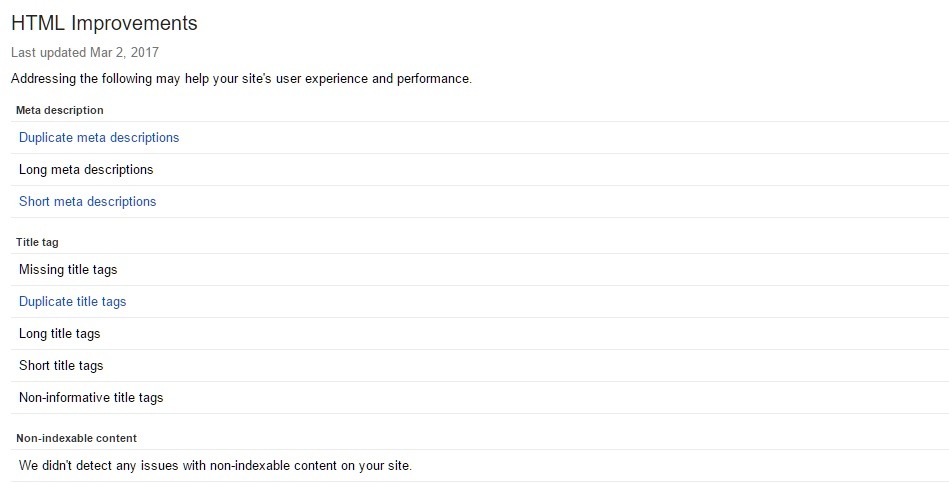

To find pages in your ecommerce site that are possibly affected by duplication problems, go to Google Search Console and click on Search Appearance in the left sidebar. Click on the HTML Improvements report and you should see something like this:

The blue linked text indicates that your site has problems with a specific type of HTML issue. In this case, it’s duplicate title tags. Clicking on this allows you to see the pages concerned. You can then export the report to a CSV file and open it in Excel.

Investigate the report and the URLs involved. Analyze why title tags and meta descriptions might be duplicating. In online stores, this could be due to:

- Lazy Title Tag Writing – In some poorly optimized sites, web developers might leave all the pages with the same title tags. Usually, it’s the site’s brand name. This can be addressed by editing the title tags and appropriately naming each page according to the essence of its content.

- Very Similar Products – Some online stores have products that have very similar properties. For example, an ecommerce store that sells garments can sell a shirt that comes in 10 different colors. If each color is treated as a unique SKU and comes with its own page, the title tags and meta descriptions can be very similar.

As mentioned in a previous section, using the rel=canonical HTML element can point bots to the version of these pages that you want indexed. It will also help search engines understand that the duplication in your site is by design.

- Accidental Duplication – In some cases, ecommerce CMS platforms could be misconfigured and run amok with page duplications. If this happens, a web developer’s help in addressing the root cause is necessary. You will likely need to delete the duplicate pages and 301 redirect them to the original.
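
To reproduce the duplicate-title check outside Search Console, for example on a URL and title list exported from a crawl, you can group pages by their normalized title. This is a minimal sketch with a hypothetical `duplicate_titles` helper; it treats titles as duplicates when they match after ignoring case and extra whitespace, which is an assumption about what counts as “the same.”

```python
from collections import defaultdict

def duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Hypothetical helper: pages maps URL -> title tag. Returns each title
    shared by two or more URLs, compared ignoring case and extra spaces."""
    groups = defaultdict(list)
    for url, title in pages.items():
        groups[" ".join(title.split()).lower()].append(url)
    return {title: sorted(urls) for title, urls in groups.items() if len(urls) > 1}

# Hypothetical crawl data: color variants sharing one title.
pages = {
    "/shirt-red": "Cotton Shirt | Shop",
    "/shirt-blue": "Cotton Shirt |  Shop",
    "/jeans": "Denim Jeans | Shop",
}
print(duplicate_titles(pages))  # {'cotton shirt | shop': ['/shirt-blue', '/shirt-red']}
```

Each group it returns is a candidate for one of the three fixes above: rewriting the titles, adding rel=canonical, or deleting and redirecting.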

Bonus: the pages identified as having short or long title tags and meta descriptions can be dealt with simply by editing these fields and making sure length parameters are followed. More on that in the Title Tags and Meta Descriptions section of this guide.

Site Speed

Over the years, site speed has become one of the most important ranking factors in Google. For your online store to reach its full ranking potential, it has to load quickly for both mobile and desktop devices. In competitive keyword battles between rival online stores, the site that has the edge in site speed usually outperforms its slower competitors.

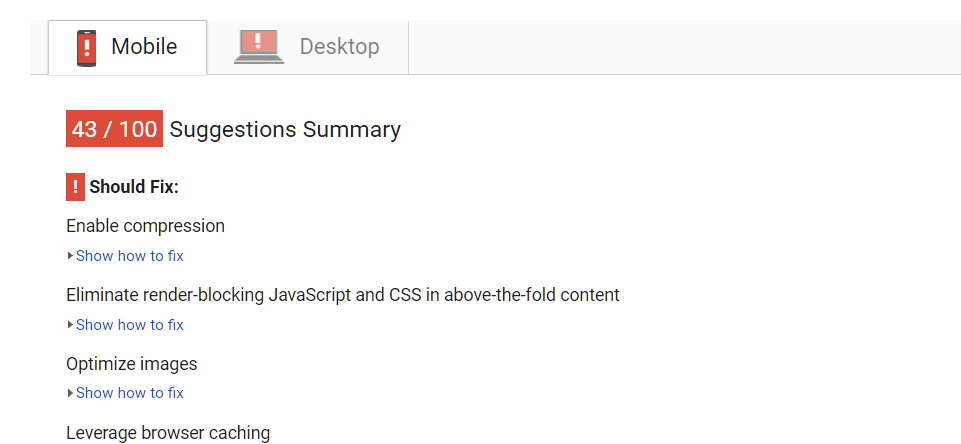

To test how well your site performs in the speed department, go to Google PageSpeed Insights. Copy and paste the URL of the page you want to test and hit Enter.

Google will rate the page on a scale of 0 to 100, and scores of 85 and above are preferred. In this example, the tested site was well below the ideal speed on both desktop and mobile. Fortunately, Google provides technical advice on how to address load time issues. Page compression, better caching, minifying CSS files and other techniques can greatly improve performance. Your web developer and designer will be able to help you with these improvements.
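
“Page compression” usually means the server compresses text responses such as HTML, CSS and JavaScript (commonly with gzip) before sending them, and the browser decompresses them on arrival. The sketch below measures how much gzip shrinks a sample of repetitive, hypothetical product-listing markup; savings on your own pages will differ, and on a live site compression is normally switched on in the web server or CDN configuration rather than in application code.

```python
import gzip

def compression_savings(body: str) -> float:
    """Fraction of bytes saved by gzip-compressing a text response."""
    raw = body.encode("utf-8")
    return 1 - len(gzip.compress(raw)) / len(raw)

# Hypothetical product-listing markup: repetitive HTML compresses very well.
listing = "".join(
    f'<li class="product"><a href="/jeans/{i}">Denim Jeans {i}</a></li>\n'
    for i in range(200)
)
print(f"{compression_savings(listing):.0%} smaller when gzipped")
```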

Secure URLs

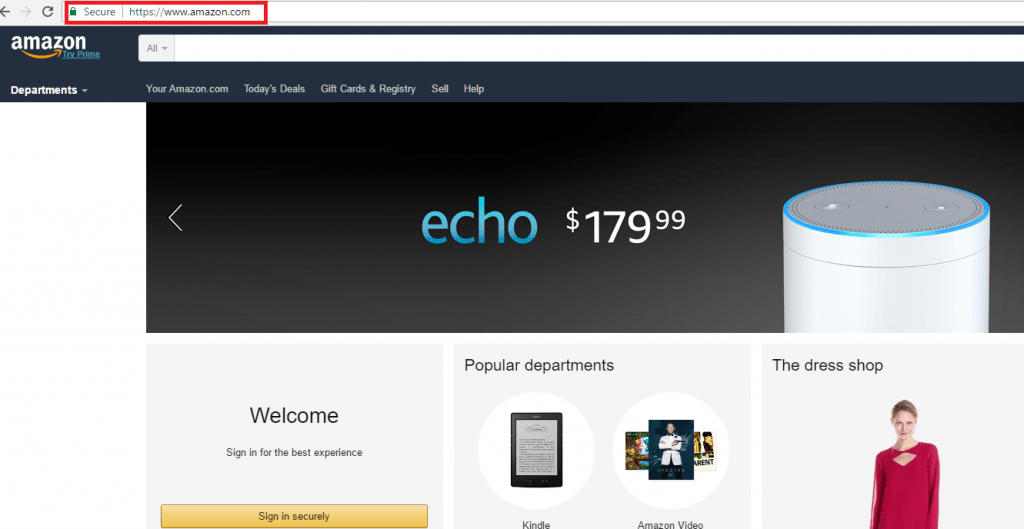

A couple of years ago, Google announced that they’re making secure URLs a ranking factor. This compelled a lot of ecommerce sites to adopt the protocol in pursuit of organic traffic gains. While we observed the SEO benefits to be marginal, the true winners are end users who enjoy more secure shopping experiences where data security is harder to compromise.

You can verify whether your online store uses secure URLs by checking for the padlock icon in your web browser’s address bar. If it’s not there, you might want to consider having HTTPS implemented by your developer. To date, most ecommerce sites only use secure URLs on their checkout pages. However, more and more online store owners are switching their entire sites to HTTPS.

Implementing secure URLs in an ecommerce site can be a daunting task. Search engines see the HTTP and HTTPS versions of a page as two different web addresses. Therefore, you’d have to serve every page on your site over HTTPS and 301 redirect all the old HTTP equivalents to their HTTPS counterparts. Needless to say, this is a major decision where SEO is just one of several factors to consider.
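
At its core, the redirect step is a one-to-one mapping from each HTTP URL to the same address on HTTPS. As a minimal sketch, the hypothetical helpers below derive that mapping from a list of URLs (for instance, the ones in your XML sitemap) using Python's standard `urllib.parse`; your developer would still translate the pairs into 301 rules in the server or platform configuration.

```python
from urllib.parse import urlsplit, urlunsplit

def https_equivalent(url: str) -> str:
    """Hypothetical helper: same host, path and query, but on HTTPS."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return url  # already HTTPS (or not a web URL): leave it alone
    return urlunsplit(parts._replace(scheme="https"))

def https_redirect_map(urls: list[str]) -> dict[str, str]:
    """Old HTTP URL -> HTTPS target, one future 301 rule per page."""
    return {url: https_equivalent(url) for url in urls if urlsplit(url).scheme == "http"}

print(https_redirect_map([
    "http://www.example.com/",
    "http://www.example.com/pants?color=blue",
]))
```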

On-Page SEO

On-page SEO refers to the process of optimizing individual pages for greater search visibility. This mainly involves increasing content quality and making sure keywords are present in elements of each page where they count.

On-page SEO can be a huge task for big ecommerce sites but it has to be done at some point. Here are the elements of each page that you should be looking to tweak:

Canonical URL Slugs

One of the things search engines look at when gauging a page’s relevance to keywords is its URL slug. Search engines prefer URLs that contain real words over undecipherable characters. For instance, a URL like [www.yourshop.com/112-656-11455] might technically work, but search engines would get a better idea of what the page is about if it looked more like [www.yourshop.com/pants]. This guide refers to URLs that use real words instead of alphanumeric combinations as “canonical URLs” (they’re also commonly called descriptive or SEO-friendly URLs). These are not to be confused with the earlier-mentioned rel=canonical links, which have nothing to do with URL slugs.

Most online shopping platforms make ready use of canonical URLs. However, some might not and this is something you need to look out for. Your web developer will be able to help you set up canonical URL slugs if it’s not what your CMS uses out of the box.
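
If you ever need to generate word-based slugs yourself, say when importing products from a supplier catalog, a common approach is to lowercase the product name and turn every run of characters that aren't letters or digits into a single hyphen. The `slugify` function below is a hypothetical sketch that assumes English-language product names: accented letters are folded to plain ASCII, but letters with no ASCII equivalent (Cyrillic, for example) are dropped, so stores in other languages need a different approach.

```python
import re
import unicodedata

def slugify(name: str) -> str:
    """Hypothetical helper: turn a product name into a word-based URL slug."""
    # Fold accented letters to ASCII ("é" -> "e"); drop anything else non-ASCII.
    text = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode("ascii")
    text = text.lower().replace("'", "")  # "men's" -> "mens", not "men-s"
    # Collapse every run of non-alphanumeric characters into one hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text)
    return text.strip("-")

print(slugify("Men’s Slim-Fit Denim Jeans"))  # mens-slim-fit-denim-jeans
```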

SEO Guide – Meta Directives

Aside from the robots.txt file, there’s another way to restrict indexing on your pages: meta directive tags. Simply put, these are HTML instructions within the <head> section of each page’s source code that tell bots what they can and can’t do with a page. The most common ones are:

- Noindex – This tag tells search engines not to index a page. Similar to the effects of a robots.txt disallow, a page will not be listed in the SERPs if it has this tag. The difference, however, is the fact that a responsible bot will not crawl a page restricted by robots.txt while a page with noindex will still be crawled – it just won’t be indexed. In that regard, search spiders can still pass through a noindexed page’s links and link equity can still flow through those links.

The noindex tag is best used for excluding blog tag pages, unoptimized category pages and checkout pages.

- Noarchive – This meta tag allows bots to index your page but tells them not to show a cached copy of it.

- Noodp – This tag tells search engines not to use a site’s Open Directory Project (DMOZ) description in its search snippets. DMOZ has since shut down, so the tag is now obsolete.

- Nofollow – This tag tells search engines that the page may be indexed but the links in it should not be followed.

As discussed earlier, it’s best to be judicious when deciding which pages you should allow Google to index. Here are a few quick tips on how to handle indexing for common page types:

- Home Page – Allow indexing.

- Product Category Pages – Allow indexing. As much as possible, add breadth to these pages by enhancing the page’s copy. More on that under the “Unique Category and Product Copy” section.

- Product Pages – Allow indexing only if your pages have unique copy written for them. If you picked up the product descriptions from a catalog or a manufacturer’s site, block indexing with the “noindex,follow” meta tag.

- Blog Article Pages – If your online store has a blog, by all means allow search engines to index your articles.

- Blog Category Pages – Allow indexing only if you’ve added unique content. Otherwise, use the “noindex,follow” tag.

- Blog Tag Pages – Use the “noindex,follow” tag.

- Blog Author Archives – Use the “noindex,follow” tag.

- Blog Date Archives – Use the “noindex,follow” tag.
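
In a page's source code, each of these directives is a robots meta tag in the `<head>`, such as `<meta name="robots" content="noindex,follow">`. As a minimal sketch, the tips above can be expressed as a lookup table from page type to directive; the page-type names and the `robots_meta_tag` helper are hypothetical, and in practice your platform or SEO plugin would emit the tag.

```python
# The indexing tips above as a lookup table. The page-type names are
# hypothetical; None means "leave indexable" (no robots meta tag needed).
ROBOTS_POLICY = {
    "home": None,
    "product_category": None,
    "product_unique_copy": None,
    "product_catalog_copy": "noindex,follow",
    "blog_article": None,
    "blog_category_unique": None,
    "blog_category_thin": "noindex,follow",
    "blog_tag": "noindex,follow",
    "blog_author_archive": "noindex,follow",
    "blog_date_archive": "noindex,follow",
}

def robots_meta_tag(page_type: str) -> str:
    """Return the tag to place in the page's <head>, or "" if none is needed."""
    directive = ROBOTS_POLICY[page_type]  # KeyError flags an unplanned page type
    if directive is None:
        return ""
    return f'<meta name="robots" content="{directive}">'

print(robots_meta_tag("blog_tag"))  # <meta name="robots" content="noindex,follow">
```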

Popular ecommerce platforms such as Shopify, Magento and WordPress all have built-in functionalities or plugins that allow web admins to easily manage meta directives in a page.

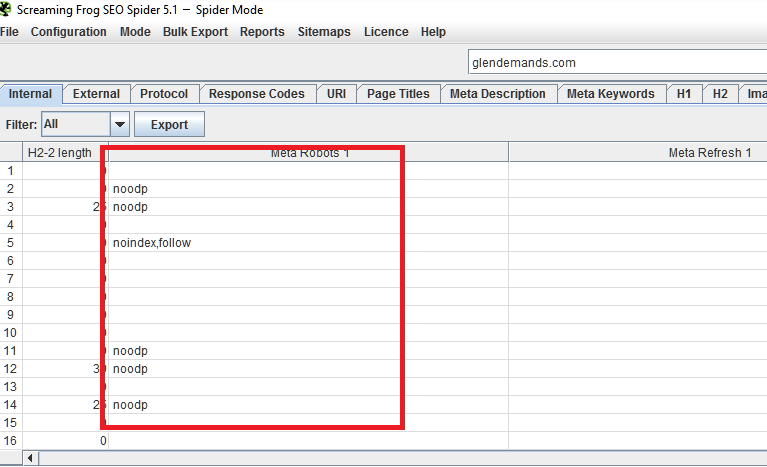

In cases where you’re wondering why a particular page isn’t being indexed, or why a supposedly restricted page is appearing in the SERPs, you can manually check the page’s source code. Alternatively, you can audit your entire site’s meta directives by running a Screaming Frog crawl.

After running a crawl, simply check the Meta Robots column. You should be able to see what meta directive tags each page has in your site. As with any Screaming Frog report, you can export this to an Excel sheet for easier data management.
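
For a quick check of a single page without a full crawl, Python's standard `html.parser` module can pull the robots meta tag out of HTML you've already downloaded. This is a minimal sketch with hypothetical names; it lowercases the directive values so that “NOINDEX” and “noindex” compare equal.

```python
from html.parser import HTMLParser

class RobotsMetaFinder(HTMLParser):
    """Hypothetical helper: collects the content of <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, but not values.
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            self.directives.append((attributes.get("content") or "").lower())

def robots_directives(html: str) -> list[str]:
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return finder.directives

page = '<html><head><meta name="ROBOTS" content="NOINDEX, FOLLOW"></head></html>'
print(robots_directives(page))  # ['noindex, follow']
```

An empty list means the page carries no robots meta tag, so by default it is indexable unless robots.txt blocks it.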

Title Tags

The title tag remains the most important on-page ranking factor for most search engines. This is the text that headlines each search result, and its main function is to tell human searchers and bots what a page is about in 60 characters or less.

Due to the brevity and importance that title tags innately have, it’s crucial for any ecommerce SEO campaign to get these right. Well-written title tags must have the following qualities to maximize a page’s ability to rank:

- 60 characters or less, including spaces

- Gives the gist of what the page is about

- Mentions the page’s primary keyword early on

- Caters to buying intent by including purchase action words

- Optionally, mentions the site’s brand

- Uses separators such as “-” and “|” to demarcate the title tag’s core and the brand name

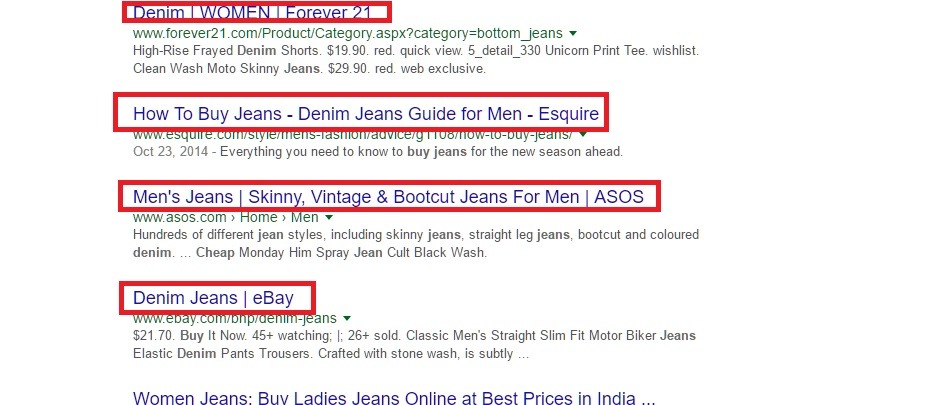

Here’s an example of a good title tag:

Buy Denim Jeans Online | Men’sWear.com

We see that the purchase word “Buy” is present but the product type “Denim Jeans” is still mentioned early on. The word “Online” is also mentioned to indicate that the purchase can be made over the Internet and the page won’t just tell the searcher where he or she can buy physically. The brand of the online store is also mentioned but the whole text block is still within the 60-character recommended length.
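
The title-tag guidelines above are easy to check in bulk once you have a list of titles, for example from a Screaming Frog export. The sketch below is a rough, hypothetical checker: the 60-character limit is this guide's rule of thumb (Google actually truncates titles by pixel width, so a character count is only a proxy), while the 30-character “early” threshold and the list of purchase words are assumptions to tune for your store.

```python
# Hypothetical defaults; tune them for your store.
PURCHASE_WORDS = ("buy", "shop", "order")
MAX_LENGTH = 60    # the guide's rule of thumb
EARLY_WITHIN = 30  # "early" = keyword starts within the first 30 characters

def title_issues(title: str, keyword: str) -> list[str]:
    """Return the guideline problems found in a title tag (empty list = passes)."""
    issues = []
    if len(title) > MAX_LENGTH:
        issues.append(f"{len(title)} characters, over the {MAX_LENGTH} limit")
    position = title.lower().find(keyword.lower())
    if position == -1:
        issues.append("primary keyword missing")
    elif position >= EARLY_WITHIN:
        issues.append("primary keyword appears late")
    words = title.lower().split()
    if not any(word in words for word in PURCHASE_WORDS):
        issues.append("no purchase action word")
    return issues

print(title_issues("Buy Denim Jeans Online | Men’sWear.com", "denim jeans"))  # []
```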

SEO Guide – Meta Descriptions

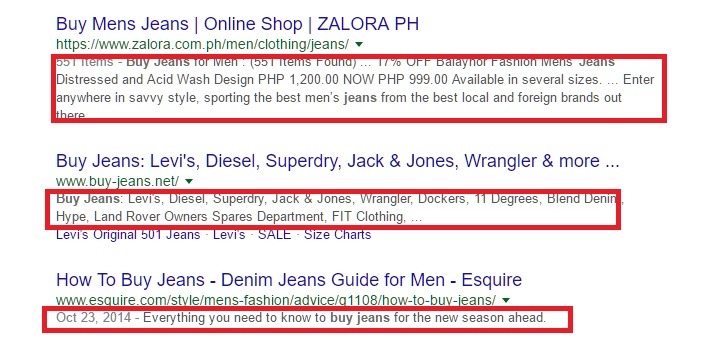

Meta descriptions are the text blocks that you can see under the title tags in search results. Unlike title tags, these aren’t direct ranking factors. As a matter of fact, you can get away with not writing them and Google would just pick up text from the page’s content that it deems most relevant to a query.

That doesn’t mean you shouldn’t care about meta descriptions, though. When written in a well-phrased and compelling manner, these can determine whether a user clicks on your listing or goes to a competitor’s page. The meta description may not be a ranking factor, but a page’s click-through rate (CTR) is widely believed to influence rankings. CTR is an engagement signal that shows which search results best satisfy users’ intent.

Good meta descriptions have the following qualities:

- Roughly 160 characters in length (including spaces)

- Provides users an idea of what to expect from the page

- Mentions the page’s main keyword at least once

- Makes a short but compelling case on why the user should click on the listing
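
Because long descriptions get cut off in the results, it helps to preview roughly where a draft would be truncated. This minimal sketch uses Python's `textwrap.shorten` to trim a description at a word boundary within the guide's roughly 160 characters and checks that the main keyword appears; the character limit is an approximation, and `snippet_preview` is a hypothetical name.

```python
import textwrap

def snippet_preview(description: str, keyword: str, limit: int = 160) -> tuple[str, bool]:
    """Hypothetical helper: trim at a word boundary to fit `limit` characters
    (including the " ..." marker) and report whether the keyword appears."""
    preview = textwrap.shorten(description, width=limit, placeholder=" ...")
    return preview, keyword.lower() in description.lower()

draft = ("Shop our full range of denim jeans online: slim, straight and relaxed "
         "fits in every wash, with free shipping and free returns on every order. "
         "Find your perfect pair of jeans today.")
preview, has_keyword = snippet_preview(draft, "denim jeans")
print(preview)
print("keyword present:", has_keyword)
```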

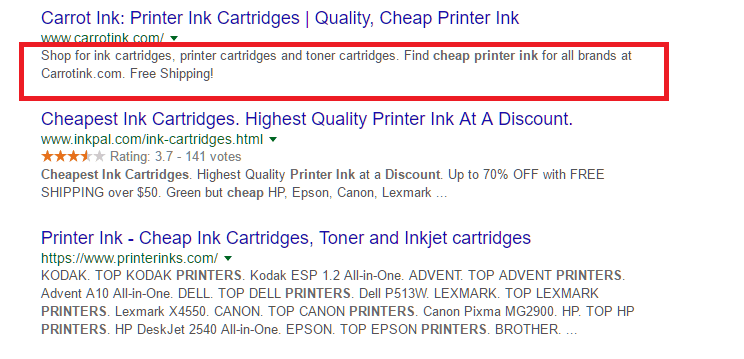

Here’s a nice sample from an online store which ranks number 1 on Google for the keyword “cheap printer inks.”

Notice how the meta description makes it clear from the start that they sell the products mentioned in the query with the phrase “Shop for ink cartridges, printer cartridges and toner cartridges.” This part also mentions the main keywords that the site targets. Meanwhile, the phrase “Find cheap printer ink for all brands at Carrotink.com. Free Shipping!” conveys additional details such as the brands covered and the value-add offer that promises free shipping. In much less than 160 characters, the listing tells shoppers looking for affordable inks that CarrotInk.com is a good destination for them.

SEO Guide – Header Text

Header text is the general term for text on a webpage that’s formatted with the H1, H2, and H3 elements. This text is used to make headline and sub-headline text stand out, giving it more visual weight to users and contextual weight to search engines.

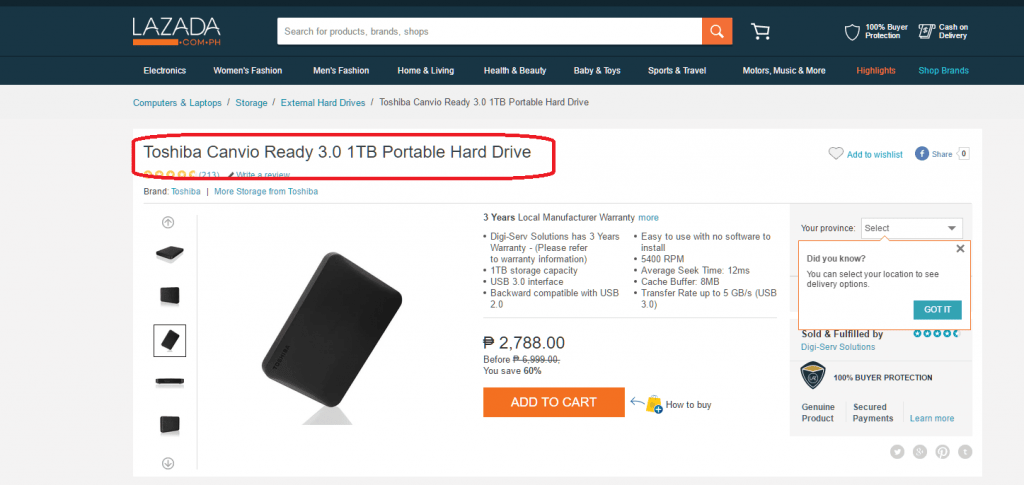

In online stores, it’s important to format main page headlines with the H1 tag. Typically, the product or category name is automatically made the main header text like we see in the example blow. Sub-headings that indicate the page’s various sections can then be marked up as H2, H3 and so on.

If your online store publishes articles and blog posts, remember to format main headlines in H1 and sub-headings in H2 and H3 as well. Effective header text is brief, direct and indicative of the content beneath it. Ideally, it also mentions that section’s main keyword at least once.

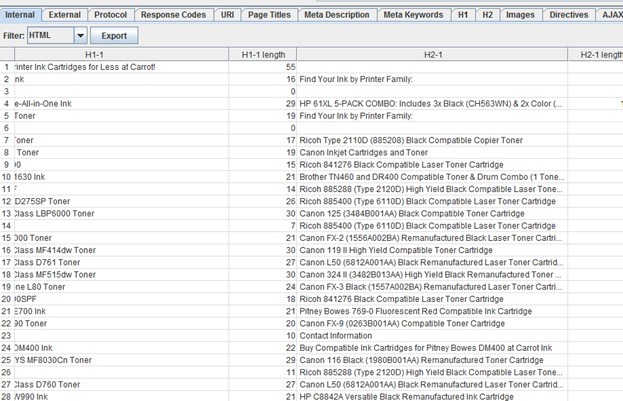

If you want to check whether all your pages have proper header text, you can audit them with Screaming Frog. Run a crawl and filter the results to HTML only using the Filter drop-down at the top left of the app window. When you scroll right, you’ll find columns headed “H1-1” and “H2-1”; these show the heading text found on each page.

You can export these to Excel for easy data management and reference when applying changes.
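Screaming Frog is the practical choice for a whole site, but if you just want to check a single page template, a few lines of Python can do a similar job. This minimal sketch uses only the standard library’s html.parser to collect H1 to H3 text and flag a page with no H1, more than one H1, or an empty heading. The one-H1-per-page rule is treated here as the convention described above rather than a hard requirement, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects the text of H1-H3 elements in document order."""

    HEADING_TAGS = ("h1", "h2", "h3")

    def __init__(self):
        super().__init__()
        self.headings = []   # (tag, text) tuples, e.g. ("h1", "Trail Running Shoes")
        self._open = None    # heading tag we are currently inside, if any
        self._parts = []     # text fragments collected inside that heading

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADING_TAGS:
            self._open, self._parts = tag, []

    def handle_data(self, data):
        if self._open:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag == self._open:
            text = " ".join("".join(self._parts).split())  # collapse whitespace
            self.headings.append((tag, text))
            self._open = None


def audit_headings(html: str) -> list[str]:
    """Flag pages with no H1, more than one H1, or empty heading elements."""
    collector = HeadingCollector()
    collector.feed(html)
    h1_count = sum(1 for tag, _ in collector.headings if tag == "h1")
    problems = []
    if h1_count == 0:
        problems.append("no H1 found")
    elif h1_count > 1:
        problems.append(f"{h1_count} H1 elements found; expected one")
    if any(not text for _, text in collector.headings):
        problems.append("empty heading element")
    return problems


page = "<h1>Trail Running Shoes</h1><p>Intro</p><h2>Men's <b>Trail</b> Shoes</h2>"
print(audit_headings(page))  # prints []
```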

SEO Guide – Image Alt Text

Google and other search engines have gotten dramatically smarter these past two decades. They’re now so much better at understanding language, reading code and interpreting user behavior as engagement signals. What they haven’t refined to a science just yet is their ability to understand what a picture shows and what it means in relation to a page’s content.

Since bots read code and don’t necessarily “see” pages the way human eyes do, it’s our responsibility to help them understand visual content. With images, the most powerful relevance signal is the alt text (shorthand for alternate text). This is a short description of what the image shows, written into the alt attribute of the image’s HTML tag.

Adding accurate, descriptive and keyword-laced alt text is very important to online stores, since online shoppers tend to buy from sites with richer visual content. Alt text helps enhance your pages’ relevance to their target keywords, and it gets you incremental traffic from Google Image Search.

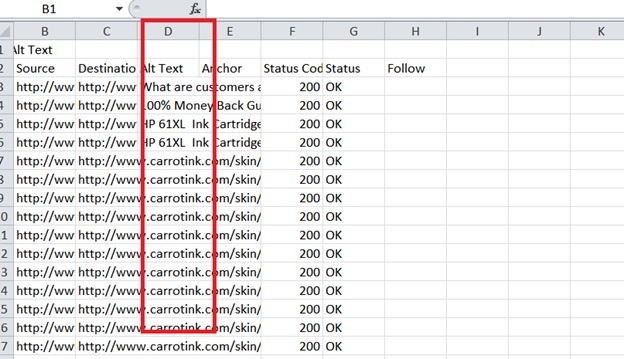

To check if your site uses proper image alt text, we can use trusty Screaming Frog once more. Do a crawl of your site from the home page and wait for the session to finish. Go to the main menu and click Bulk Export>Images>All Alt Text. Name the file and save it. Open the file in Excel and you should see something like this:

Column B (Source) tells us which page the image was found on. Column C (Destination) tells us the web address of the image file. Column D (Anchor) is where we’ll find the alt text, if any has already been written. A blank cell means no alt text is in place, and you’ll need to write it at some point.

Good image alt texts have the following qualities:

- About 50 characters long at most

- Describes what’s depicted in the image directly in one phrase

- Mentions the image’s main keyword at least once
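If you’d rather spot-check a single page than export a full crawl, part of the checklist above can be approximated in a minimal Python sketch using the standard library’s html.parser. It lists each img tag whose alt attribute is missing, empty or longer than about 50 characters. Judging whether the text describes the image accurately and includes the keyword still needs a human, and the class and function names here are hypothetical.

```python
from html.parser import HTMLParser


class ImageAltCollector(HTMLParser):
    """Records the src and alt attributes of every img tag on a page."""

    def __init__(self):
        super().__init__()
        self.images = []  # (src, alt) tuples; alt is None when the attribute is absent

    def handle_starttag(self, tag, attrs):
        # html.parser also routes self-closing <img ... /> tags through this method.
        if tag == "img":
            attributes = dict(attrs)
            self.images.append((attributes.get("src", ""), attributes.get("alt")))


def alt_text_problems(html: str, max_len: int = 50) -> list[tuple[str, str]]:
    """Return (image src, problem) pairs for images with missing, empty or over-long alt text."""
    collector = ImageAltCollector()
    collector.feed(html)
    problems = []
    for src, alt in collector.images:
        if alt is None or not alt.strip():
            problems.append((src, "missing alt text"))
        elif len(alt) > max_len:
            problems.append((src, f"alt text is {len(alt)} characters (limit {max_len})"))
    return problems


page = ('<img src="boot.jpg" alt="Brown leather hiking boot">'
        '<img src="sock.jpg">'
        '<img src="laces.jpg" alt="" />')
print(alt_text_problems(page))
# prints [('sock.jpg', 'missing alt text'), ('laces.jpg', 'missing alt text')]
```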

Different CMS platforms have different ways of editing image alt text. In many cases, the spreadsheet you feed into the CMS for bulk product uploads can also include the alt text for each image.

SEO Guide – Unique Category and Product Copy

SEO has everything to do with the age-old Internet adage “content is king” and that applies even to ecommerce websites. Analyses have continually shown that Google tends to favor pages and sites with more breadth and depth in their content. While most online store owners would cringe at the idea of turning their site into “some kind of library,” smarter online retailers know that content can co-exist with their desired web design schemes to produce positive results.

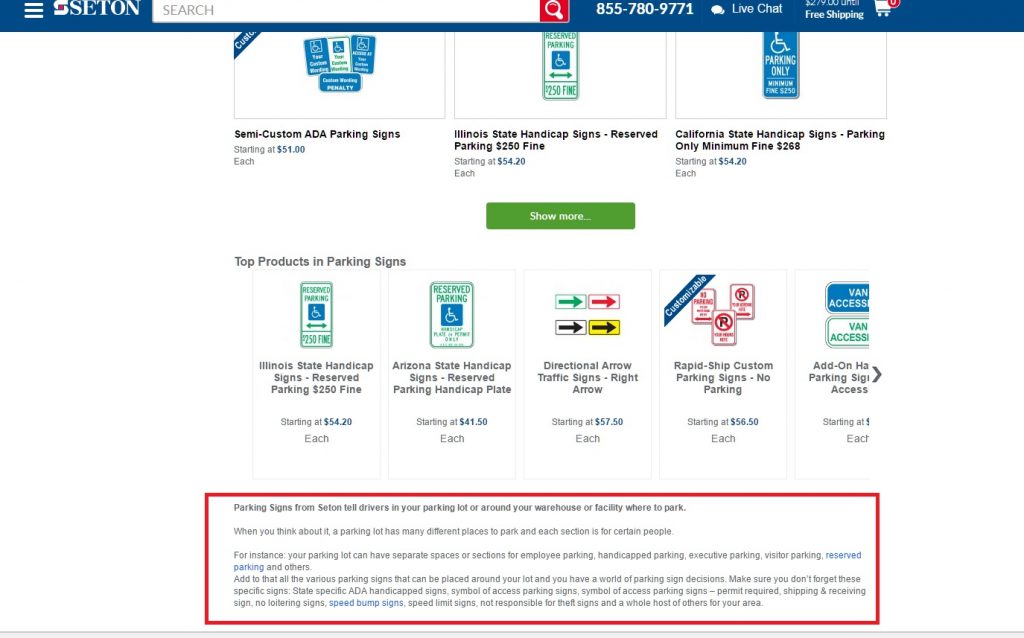

One of the fundamental on-page SEO flaws on a lot of ecommerce sites is the proliferation of thin content: unoptimized category pages and product pages with boilerplate copy. Unoptimized category pages have little text and no reason to exist other than to link to subcategory pages or product pages. Product pages with boilerplate content, on the other hand, don’t deliver unique value to searchers. If your online store has either shortcoming, search engines won’t look on it with much favor, allowing other pages with better content to outrank yours.

Category pages are particularly important because they usually target non-branded, short and medium-length keywords. These keywords represent query intents that correspond with the top and middle parts of sales funnels. In short, these are the pages users look for when they’re in the early stages of forming their buying decisions.

Category pages can be optimized for SEO by adding one or both of the following:

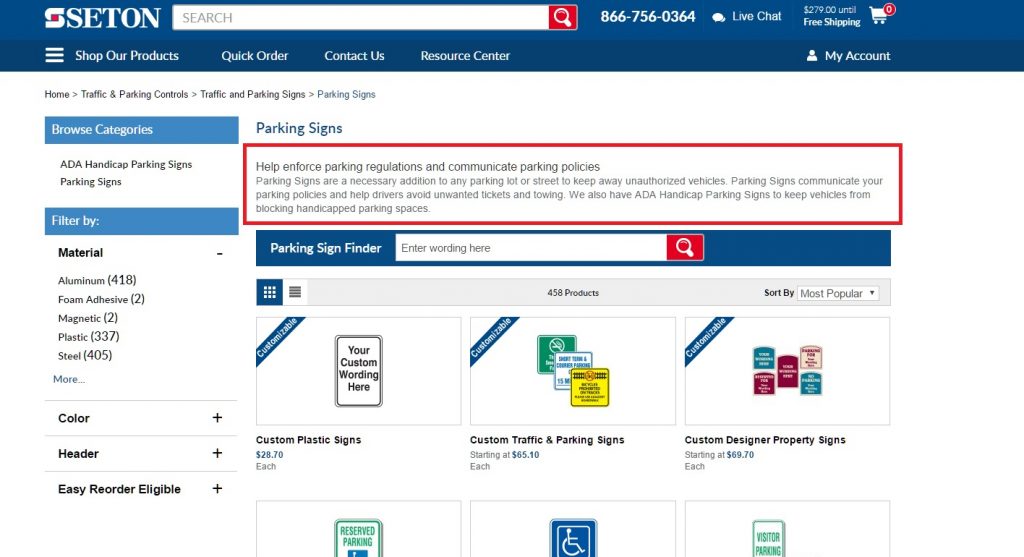

- A short text blurb that states what the category is about and issues a call to action. This is usually a 2-3 sentence paragraph situated between the H1 text and the subcategory or product links.

- Longer copy that further discusses the category and the items listed under it. This can be one or more paragraphs and is positioned near the bottom of the page’s body, under the list of subcategories or products. This text is aimed less at human readers than at helping search engine bots understand what the page is about.

For both text block types, the goal is to increase the amount of text and provide greater relevance between the page and its target keyword. The text blocks also provide opportunities for internal linking as the text can be used as anchors for links to other pages.

Product pages, on the other hand, represent query intents that are near the bottom of the sales funnel. Product pages match the intents of users who already have a good idea of what they want to buy and are just looking for the best vendor to sell it to them. The keywords that product pages represent are typically lower in search volume but higher in conversion rate.

Optimizing the copy on product pages usually isn’t so much difficult as it is work-intensive. Researching hundreds or even thousands of product details, then rewriting them for uniqueness, can consume a ton of man-hours. Typically, SEO-savvy online store owners do this selectively for just their priority product lines. Product pages that still lack unique content are typically kept out of the index with the noindex tag, with the aim of focusing link equity and crawl budget on pages that have been optimized with unique copy.
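The noindex approach described above can be sketched as a simple rule in Python. This is a minimal illustration under stated assumptions: the ProductPage fields are hypothetical, and a page counts as “unique” here simply when its description is not the manufacturer’s stock text word for word, which is a much cruder test than any real duplicate-content check. Pages that fail get a robots meta tag with “noindex, follow”, so they stay out of the index while crawlers can still follow their links; pages that pass get no tag, since indexing is the default. Keep in mind that crawlers still have to fetch a page to see its noindex tag.

```python
from dataclasses import dataclass


@dataclass
class ProductPage:
    url: str
    description: str               # the copy currently shown on the page
    manufacturer_description: str  # hypothetical field: the stock copy supplied with the product feed


def normalize(text: str) -> str:
    """Lower-case the text and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def has_unique_copy(page: ProductPage) -> bool:
    # Crude assumption: copy is "unique" if it is not the stock text verbatim.
    return normalize(page.description) != normalize(page.manufacturer_description)


def robots_meta_tag(page: ProductPage) -> str:
    """Return the robots meta tag for the page's <head>, or "" when none is needed."""
    if has_unique_copy(page):
        return ""  # indexable: "index, follow" is the default, so no tag is required
    return '<meta name="robots" content="noindex, follow">'


stock_page = ProductPage("/boots/trail-y", "Stock text from the supplier.", "Stock text from the supplier.")
print(robots_meta_tag(stock_page))
```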

When writing unique copy for product pages, focus on writing the following in your own way:

- The Benefit Statement – The part of the copy that lists 3 or 4 bullet points stating how the product solves a problem or makes life better for the target customer.

- Use Cases – A section in the copy where you mention possible uses and applications of the product.

- How-to Section – A part of the copy where you provide instructions on how the product can be used or installed.

- Customer Reviews – The part of the copy where you allow buyers to write feedback about a product. Just make sure to allow only verified customers to post. Moderating the content for quality and readability can also be helpful.

If you want to see a good example of rich, unique and useful product page copy, here’s one.

Category and product page content can also help your pages achieve “position zero” on Google: the featured snippet box that’s displayed above the regular results for some queries. If your content answers a question in a clear and direct manner, uses proper header tags, and uses proper HTML tags for bulleted and numbered lists, you may find it featured prominently in the SERPs.

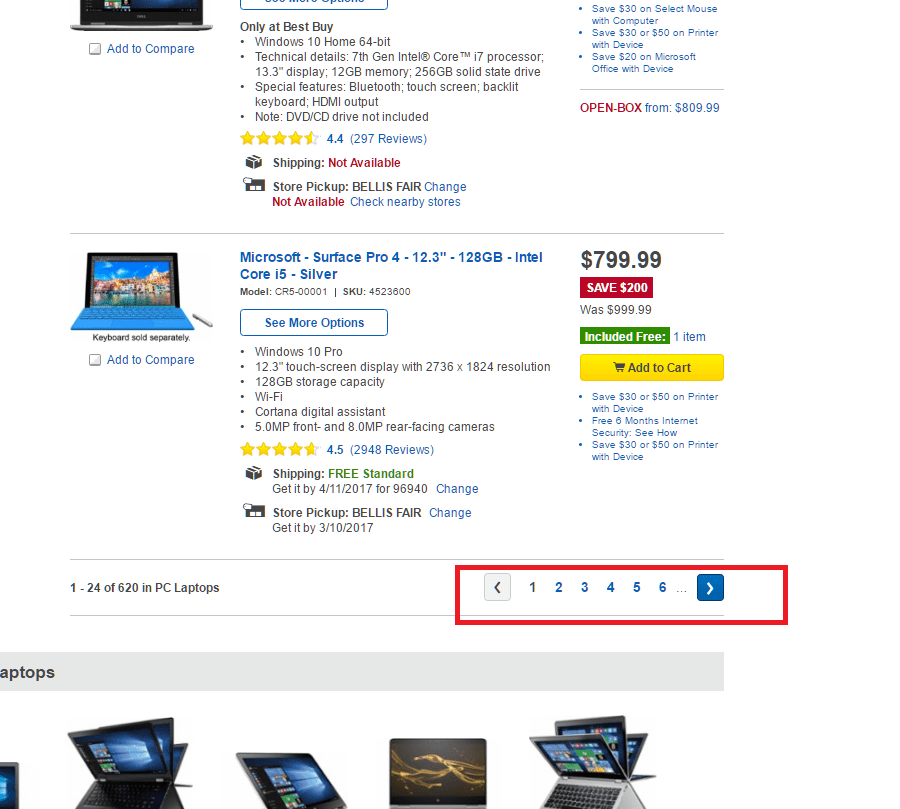

SEO Guide – Pagination

Product category pages sometimes contain so many items that it would look awful to display them all on one page. To solve that design problem, web designers use pagination: the category page displays a portion of its listings and moves the rest onto subsequent pages. If users want to see more, they can click on page numbers that take them to a near-identical page where other items in the list are displayed.

While this works great for design and usability, it can become an SEO issue if you’re not careful. Search engines aren’t great at figuring out that a series of pages can belong to a bigger whole. As such, they tend to crawl and index the pages in a paginated series and view them as duplicates, because the pages share the same title tag, meta description and category page copy.

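To help search engines identify a paginated series in your category pages, have your web developer add rel="prev" and rel="next" link elements to the head of each paginated page. These elements tell search engines that the pages belong to a series, so the series can be understood as one sequence rather than as a set of near-duplicate pages. Note that Google announced in 2019 that it no longer uses these elements as an indexing signal, though other search engines may still read them. You can read about these HTML elements in greater detail here.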
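For developers, generating the rel="prev" and rel="next" link elements for page N of a series is a small templating job. The minimal Python sketch below assumes a hypothetical URL scheme in which page 1 lives at the bare category URL and later pages add ?page=N; adapt url_for to whatever your platform actually uses. How much weight a given search engine gives to these hints varies, so treat the markup as a signal rather than a guarantee.

```python
def pagination_links(base_url: str, page: int, last_page: int) -> list[str]:
    """Build rel="prev"/rel="next" <link> tags for page `page` of a paginated category.

    Hypothetical URL scheme: page 1 is base_url, later pages are base_url + "?page=N".
    """
    if not 1 <= page <= last_page:
        raise ValueError(f"page {page} is outside 1..{last_page}")

    def url_for(n: int) -> str:
        return base_url if n == 1 else f"{base_url}?page={n}"

    tags = []
    if page > 1:          # every page after the first points back to its predecessor
        tags.append(f'<link rel="prev" href="{url_for(page - 1)}">')
    if page < last_page:  # every page before the last points forward to its successor
        tags.append(f'<link rel="next" href="{url_for(page + 1)}">')
    return tags


for tag in pagination_links("https://www.example.com/printer-ink", 2, 3):
    print(tag)
```

The first page only gets a “next” link and the last page only gets a “prev” link, which is what lets a crawler walk the whole chain from either end.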

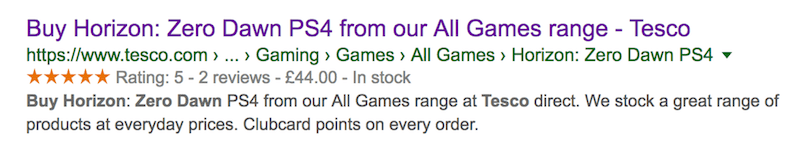

SEO Guide – Aggregate Review Schema

You’ve probably seen search results for products and services with review stars affixed to listings. These stars do more than occupy space on SERPs and catch the eye – they can also persuade more searchers to click. For online stores, having review stars appear in product page listings can be very handy. These rich snippets can be the deciding factor in whether a user clicks on your listing or your competitor’s.

Fortunately, adding the markup for review stars is relatively easy. Add this JSON-LD snippet to each product page’s HTML:

    <script type="application/ld+json">
    {
      "@context": "https://schema.org",
      "@type": "Product",
      "name": "##PRODUCT##",
      "aggregateRating": {
        "@type": "AggregateRating",
        "ratingValue": "##RATING##",
        "reviewCount": "##REVIEWS##"
      }
    }
    </script>

Replace ##PRODUCT## with the product’s name, ##RATING## with the product’s average review score and ##REVIEWS## with the number of reviews. If you don’t feel comfortable handling code, assigning this task to a web developer will get things moving in the right direction.
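If your platform stores individual ratings, you can generate the aggregate rating markup instead of filling in placeholders by hand. This minimal Python sketch builds a Product object with an AggregateRating using the standard json module, computing ratingValue as the mean rating rounded to one decimal place and reviewCount as the number of ratings. The function name and the rounding are our own choices, not requirements, and the sketch deliberately refuses to emit markup for a product with no reviews.

```python
import json


def aggregate_rating_jsonld(product_name: str, ratings: list[int]) -> str:
    """Return a <script> block with Product + AggregateRating JSON-LD for one product page."""
    if not ratings:
        # No real reviews yet: leave the markup off rather than inventing a score.
        raise ValueError("product has no reviews")

    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product_name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": round(sum(ratings) / len(ratings), 1),
            "reviewCount": len(ratings),
        },
    }
    # json.dumps handles quotes and special characters in the product name;
    # escaping "</" as "<\/" (still valid JSON) stops a name from closing the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


print(aggregate_rating_jsonld("Trail Runner X", [5, 4, 4]))
```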

Of course, adding this markup does not guarantee that Google will actually show the review stars; Google decides algorithmically whether to display them. To improve your chances of getting aggregate review stars to show up, keep the following in mind:

- Add the markup code only to specific product pages. Adding it to the ecommerce site’s home and category pages will have no effect.

- Encourage real customer reviews and avoid fabricating your own review scores.

- Try to promote a healthy balance between reviews from within the site and from external sources.

- If you’re planning to ask people to rate your products, don’t do it for every product all at once. Having every page suddenly get ratings looks unnatural and may be viewed unfavorably by Google.

Note that some CMS platforms handle Schema markup better than others. WordPress, for instance, has Schema markup built in if you’re running a Genesis-based theme.

Bonus: Link Building Strategy

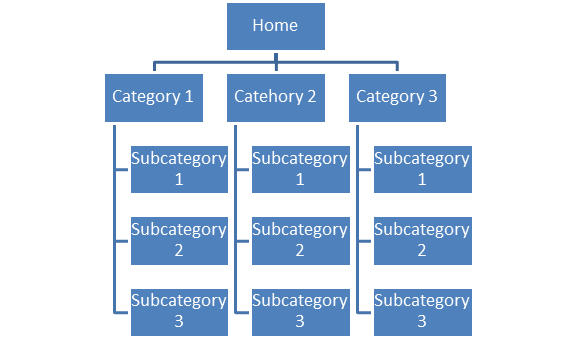

While this post is about on-site SEO, ecommerce site owners will inevitably have to think about link building if they want to dominate their search niche. Income Diary has posted about how to build links the right way in the past, so we won’t go into detail on that. We will, however, discuss where to point those backlinks.

Generally, websites follow information architectures that are pyramid shaped. Imagine the home page as the tip of the pyramid while its main categories and subcategories make up the next few layers below it. At the base of the pyramid are the product pages. They’re the most numerous page type but they’re at the bottom of the hierarchy.

Now, picture the pyramid as one that has an internal water distribution system. In this case, the water is the link equity. Most of it comes from the tip (the home page), since this is where most inbound links point. The equity flows down to the category pages that are linked from the home page, then descends and distributes throughout the product pages.

Given this, the home page is the best page to point inbound links to if your goal is to boost the ranking power of all your pages. This helps the home page rank better for the short keywords it targets while providing incremental boosts to category and product pages.

If you have a product line that you are prioritizing over the rest, it would serve you well to build links to its category page. This not only helps the category page perform better in the SERPs, it also cascades more link equity to the product pages beneath it.
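The water analogy is essentially the idea behind the original PageRank formula. The toy Python sketch below runs a simplified personalized PageRank (power iteration with a damping factor of 0.85) over a hypothetical seven-page store, where the entry argument says which page outside links bring equity into. It illustrates the pyramid model under heavy assumptions and is not how Google ranks pages today. In this toy model, pointing all entry equity at the home page leaves the home page with the highest score, while pointing it at one category raises the scores of that category’s products and lowers those of the other category.

```python
def link_equity(links: dict[str, list[str]], entry: dict[str, float],
                damping: float = 0.85, iterations: int = 100) -> dict[str, float]:
    """Toy personalized PageRank computed by power iteration.

    links: each page mapped to the internal pages it links to (every page needs at least one link).
    entry: where external link equity enters the site; weights should sum to 1.
    """
    pages = list(links)
    score = {page: 1 / len(pages) for page in pages}
    for _ in range(iterations):
        # Equity arriving from outside the site, via the pages that inbound links point to.
        new = {page: (1 - damping) * entry.get(page, 0.0) for page in pages}
        # Each page splits the rest of its current score evenly across its outgoing links.
        for page, targets in links.items():
            share = damping * score[page] / len(targets)
            for target in targets:
                new[target] += share
        score = new
    return score


# Hypothetical store: the home page links to two categories, each category links to its
# two products, and every page links back up via navigation and breadcrumbs.
SITE = {
    "home": ["cat_a", "cat_b"],
    "cat_a": ["home", "prod_a1", "prod_a2"],
    "cat_b": ["home", "prod_b1", "prod_b2"],
    "prod_a1": ["home", "cat_a"],
    "prod_a2": ["home", "cat_a"],
    "prod_b1": ["home", "cat_b"],
    "prod_b2": ["home", "cat_b"],
}

from_home = link_equity(SITE, {"home": 1.0})    # all backlinks point at the home page
from_cat_a = link_equity(SITE, {"cat_a": 1.0})  # all backlinks point at category A
for page in SITE:
    print(f"{page:8} home-links: {from_home[page]:.3f}  cat_a-links: {from_cat_a[page]:.3f}")
```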

Ultimately, good SEO for online stores is all about making your pages more crawlable and more relevant. Adding these optimization tasks to your site maintenance to-do list may look daunting, but the rewards are well worth the effort.

Author Bio: Itamar Gero has been on the net since the days it was still in black and white. Born and raised in Israel, he now lives in the Philippines. He is the founder of SEOReseller.com and has recently launched Siteoscope.com.
